Illumination

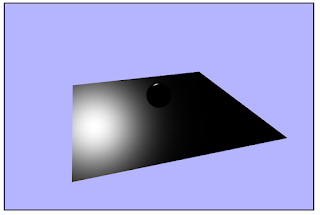

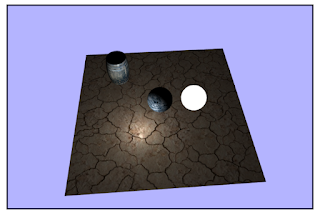

This week's material covered how the perceived color of an object depends on its material properties, its surface normals, and the positions of both the camera and the light(s) in the scene. Two kinds of reflection determine how a light source affects the color of a pixel: diffuse reflection and specular reflection.
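To make the dependence on normal, light, and camera concrete, here is a minimal JavaScript sketch of the two reflection terms for a single light: Lambert's cosine law for diffuse and the classic Phong reflection model for specular. The function names and the `shininess` parameter are illustrative rather than taken from the project code, and all vectors are assumed to be unit length and to point away from the surface.

```javascript
// Minimal vector helpers for 3-component arrays.
const dot = (a, b) => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const scale = (v, s) => [v[0] * s, v[1] * s, v[2] * s];
const sub = (a, b) => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];

// Diffuse (Lambert): depends only on the surface normal N and the
// direction to the light L, so it looks the same from any camera position.
function diffuseTerm(normal, toLight) {
  return Math.max(dot(normal, toLight), 0);
}

// Specular (Phong): reflect L about N and compare the result with the
// direction to the camera V, so moving the camera moves the highlight.
function specularTerm(normal, toLight, toViewer, shininess) {
  const nDotL = dot(normal, toLight);
  if (nDotL <= 0) return 0; // light is behind the surface: no highlight
  // R = 2 (N . L) N - L
  const reflected = sub(scale(normal, 2 * nDotL), toLight);
  return Math.pow(Math.max(dot(reflected, toViewer), 0), shininess);
}
```

With the light straight overhead (`[0, 1, 0]`) on an upward-facing surface, `diffuseTerm` returns 1 wherever the camera is, while `specularTerm` falls from 1 toward 0 as the viewer direction swings away from the reflected ray.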

We also briefly touched on direct and indirect illumination. Direct illumination is light that reaches a surface straight from a light source (in a ray-tracing renderer, this corresponds to a single bounce of light, without computing the contribution of further reflections). Indirect illumination, which global illumination methods account for, covers light that bounces multiple times, potentially reaching several objects in the scene before it arrives at the surface being shaded. In the rasterization pipeline, this is approximated with a constant ambient lighting term.
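As a rough illustration of that approximation, the sketch below combines the direct terms from each light with a single constant ambient term standing in for all indirect light. It assumes the per-light diffuse and specular intensities have already been computed, treats the lights as white, and follows the common convention of tinting ambient and diffuse by the surface color while adding specular untinted; `combineLighting` is a hypothetical name.

```javascript
// Combine per-light direct lighting with a constant ambient term.
// baseColor: surface RGB in [0, 1]; ambient: constant stand-in for
// indirect light; diffuseTerms/specularTerms: one intensity per light.
function combineLighting(baseColor, ambient, diffuseTerms, specularTerms) {
  const diffuse = diffuseTerms.reduce((sum, d) => sum + d, 0);
  const specular = specularTerms.reduce((sum, s) => sum + s, 0);
  // Ambient and diffuse are tinted by the surface color; the specular
  // highlight takes the (assumed white) light color. Clamp to at most 1.
  return baseColor.map((c) => Math.min(c * (ambient + diffuse) + specular, 1));
}
```

A surface facing away from every light still receives `baseColor * ambient` instead of going fully black, which is the role the constant plays in place of bounced light.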

Comparison panels: Specular (Phong), Diffuse, and Specular + Diffuse + Ambient.

Applications

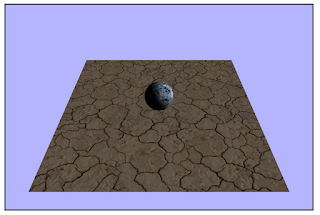

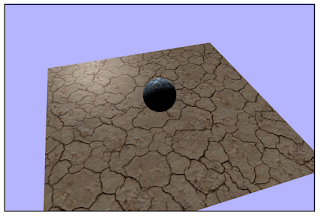

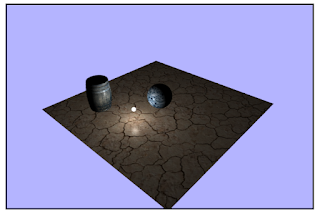

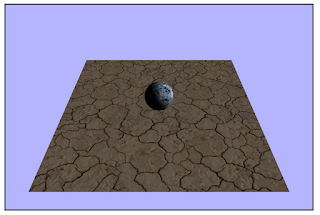

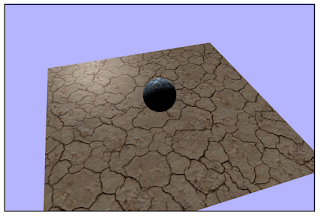

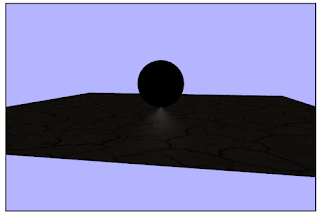

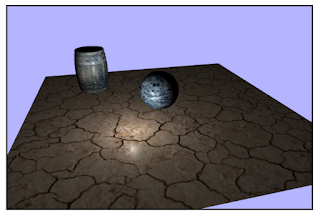

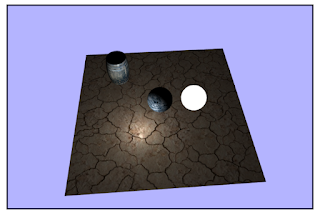

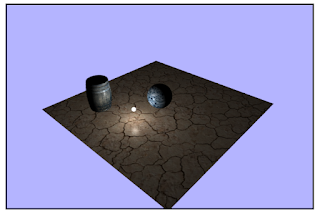

In the projects, we built scenes showing how two different types of light source interact with geometry colored either by a texture or by hard-coded flat colors. The first project, shown in the pictures above, used a directional light, which the implementation represents as a pure direction that stays unchanged throughout the pipeline. Examples of directional light are the sun and the light reflected by the moon. The second project, shown in the pictures below, covers the interaction between geometry and a point light. A point light is implemented as a position in space, so every point on a surface has its own direction to the light, computed from its world position. Combined with the surface normal, this varying direction determines how much light each part of the surface reflects.
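A minimal JavaScript sketch of that difference, computing the surface-to-light direction that each lighting calculation needs. It assumes the directional light is stored as the direction the light travels (from the light into the scene); code that stores the opposite convention would drop the negation. In the project's shaders the point-light version would run per fragment in GLSL; the function names here are hypothetical.

```javascript
const normalize = (v) => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Directional light: one fixed direction for the whole scene. The stored
// vector is assumed to point from the light into the scene, so it is
// negated to get the surface-to-light direction used in N . L.
function toDirectionalLight(lightDirection) {
  return normalize([-lightDirection[0], -lightDirection[1], -lightDirection[2]]);
}

// Point light: the direction depends on where the surface point is,
// so it must be recomputed from each world-space position.
function toPointLight(lightPosition, worldPosition) {
  return normalize([
    lightPosition[0] - worldPosition[0],
    lightPosition[1] - worldPosition[1],
    lightPosition[2] - worldPosition[2],
  ]);
}
```

Every surface point shares the directional result, whereas `toPointLight([0, 5, 0], ...)` points straight up from the origin but tilts toward the light for a point at `[5, 0, 0]`.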

|

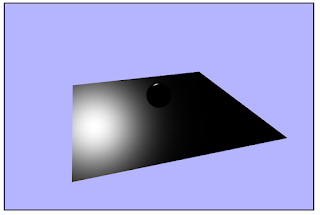

Removed any hint of interaction with the Directional Light, and

created a clean slate to build the Point Light.

|

|

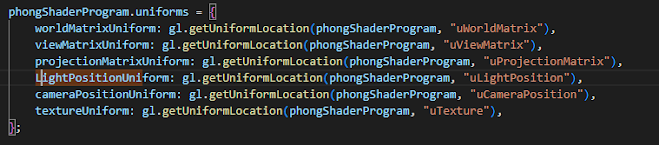

This was a failure. Hard coding the light position as a vec3 in the

point light fragment shader worked, but my uniform value

passed from app.js was not cooperating.

|

|

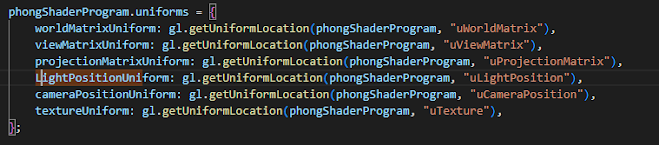

| Turns out JavaScript is case-sensitive. Who knew? |

|
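One way to catch this kind of mismatch early is to check the result of `gl.getUniformLocation`, which returns `null` when no active uniform has exactly that (case-sensitive) name. Passing that `null` to a `gl.uniform*` call is silently ignored, so the shader simply never receives the value. The helper below is a hypothetical sketch, and the uniform name `lightPosition` is an assumption rather than the project's actual name. A uniform that the shader never uses can also be optimized away and report `null`.

```javascript
// Look up a uniform location and fail loudly instead of silently.
// WebGL's getUniformLocation returns null for an unknown (or unused,
// optimized-out) uniform, and uniform3fv(null, ...) is a no-op.
function getUniformLocationOrThrow(gl, program, name) {
  const location = gl.getUniformLocation(program, name);
  if (location === null) {
    throw new Error(`Uniform "${name}" not found; check spelling and case.`);
  }
  return location;
}

// Example use inside the render loop (gl, program, lightPosition assumed):
// const loc = getUniformLocationOrThrow(gl, program, "lightPosition");
// gl.uniform3fv(loc, lightPosition);
```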

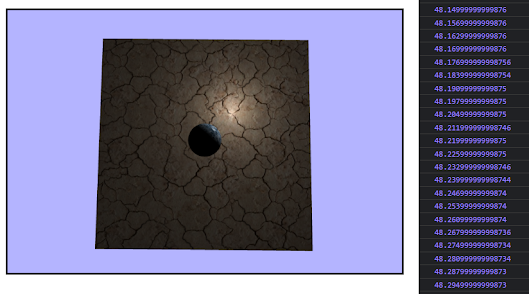

After fixing that funny business, I thought the values derived from rotating lightPosition about the y-axis weren't having any effect, and I wasted some time trying to figure out what I was doing wrong. As it turns out, driving the rotation with time.secondsElapsedSinceStart isn't the fastest way to actually see results: my rotation was in fact working, just very slowly. I would later make the rotation independent of time and use a constant angle change per update call instead, but for now, I simply scaled the time value.
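For reference, a time-driven rotation about the y-axis might look like the sketch below, which leaves the base position untouched and recomputes a rotated copy each frame, much like the "lightPositionRotated" approach described later. It assumes angles in radians internally and a hypothetical `degreesPerSecond` scale factor. If, for example, elapsed seconds were used directly as an angle in degrees, the light would move only one degree per second, which is easy to mistake for no motion at all.

```javascript
// Rotate a point about the world y-axis by `radians` (right-handed:
// positive angles turn +x toward -z, matching a standard rotateY matrix).
function rotateY(point, radians) {
  const c = Math.cos(radians);
  const s = Math.sin(radians);
  const [x, y, z] = point;
  return [c * x + s * z, y, -s * x + c * z];
}

// Time-driven angle: basePosition is never modified; a rotated copy is
// recomputed every frame. degreesPerSecond is the (hypothetical) scale factor.
function lightPositionAt(basePosition, secondsElapsed, degreesPerSecond) {
  const radians = (secondsElapsed * degreesPerSecond * Math.PI) / 180;
  return rotateY(basePosition, radians);
}
```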

|

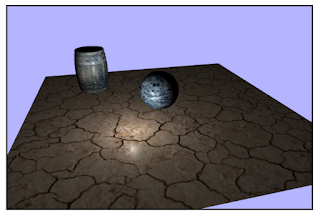

Enter: the barrel. This was straightforward, and it was interesting to add additional

objects to the scene and follow how that process would be different, and potentially

more cumbersome, given different or new types of geometry.

I created a new pair of shaders for a crudely emissive object representing the point light, drawn with an additional sphere geometry object. At this point, I only needed to scale down the geometry and sync its position with the point light. Easy enough, right?
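The sphere's world matrix only needs a uniform scale followed by a translation to the light's position. A minimal sketch, assuming column-major 4×4 matrices stored as flat 16-element arrays (the layout WebGL's `uniformMatrix4fv` expects); `lightMarkerWorldMatrix`, `transformPoint`, and `radiusScale` are hypothetical names.

```javascript
// Build a world matrix that scales a unit sphere by `radiusScale` and then
// moves it to `lightPosition`: M = T(lightPosition) * S(radiusScale).
// Column-major layout: the translation lives in elements 12, 13 and 14.
function lightMarkerWorldMatrix(lightPosition, radiusScale) {
  const [x, y, z] = lightPosition;
  const s = radiusScale;
  return [
    s, 0, 0, 0, // column 0
    0, s, 0, 0, // column 1
    0, 0, s, 0, // column 2
    x, y, z, 1, // column 3 (translation)
  ];
}

// Apply a column-major 4x4 matrix to a point (w = 1).
function transformPoint(m, p) {
  const [x, y, z] = p;
  return [
    m[0] * x + m[4] * y + m[8] * z + m[12],
    m[1] * x + m[5] * y + m[9] * z + m[13],
    m[2] * x + m[6] * y + m[10] * z + m[14],
  ];
}
```

Because the scale is applied first, the sphere shrinks around its own center before it moves, so the top vertex of a unit sphere, `[0, 1, 0]`, lands `radiusScale` above the light. Reversing the order would also scale the translation and pull the marker toward the origin.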

Well, sort of easy enough. This is where I decided to change how I was rotating the point light's effect on the scene. Before the change, I rotated the light position with a matrix whose angle was driven by time, but my vector3 variable "lightPosition" was never actually changed. Each time updateAndRender was called, I created a new "lightPositionRotated" vector3 and assigned its xyz values to the lightPositonUniform passed to the fragment shader. This felt off, and it made transforming my sphereLightGeometry object's worldMatrix feel off too, since I was transforming it based on the lightPositionRotated values. That only worked within the scope of the updateAndRender call, which made the solution feel limited and annoying to scale if I ever needed to change "lightPosition" from a function outside updateAndRender.

Instead, I made the rotation angle a constant rather than elapsed time (to avoid accelerating rotation) and had that transformation update the "lightPosition" variable itself, removing the need for "lightPositionRotated." My sphereLightGeometry was then rescaled and translated to the updated "lightPosition" for the intended visual result.

This explanation feels a little rant-forward. Does this change mean much? The same result could have been reached with the original structure and an unchanging “lightPosition”, but would that break some sort of convention? Or is it just a friendly dose of overthinking? Find out next time.
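The refactored approach can be sketched as a small state update: a constant angle per call rotates `lightPosition` in place, and everything that depends on the light (the uniform value, the marker sphere's position) reads from that single variable. The `scene` object, `stepLight`, and the two-degree step are hypothetical; the rotation uses the same right-handed y-axis convention as a standard rotateY matrix.

```javascript
const STEP_RADIANS = (2 * Math.PI) / 180; // hypothetical constant: 2 degrees per update

// Rotate [x, y, z] about the y-axis (right-handed, +x turns toward -z).
function rotateY([x, y, z], radians) {
  const c = Math.cos(radians);
  const s = Math.sin(radians);
  return [c * x + s * z, y, -s * x + c * z];
}

// Update the shared light position once per call. Any other function
// can now read or change scene.lightPosition directly.
function stepLight(scene) {
  scene.lightPosition = rotateY(scene.lightPosition, STEP_RADIANS);
  // The marker sphere follows the (already updated) light; copy the array
  // so the two positions do not alias each other.
  scene.markerPosition = scene.lightPosition.slice();
}
```

One trade-off worth knowing: a fixed step per call ties the rotation speed to the frame rate. Multiplying an angular speed by the time since the previous frame keeps the speed steady without the accelerating effect of feeding total elapsed time into an incremental rotation.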
